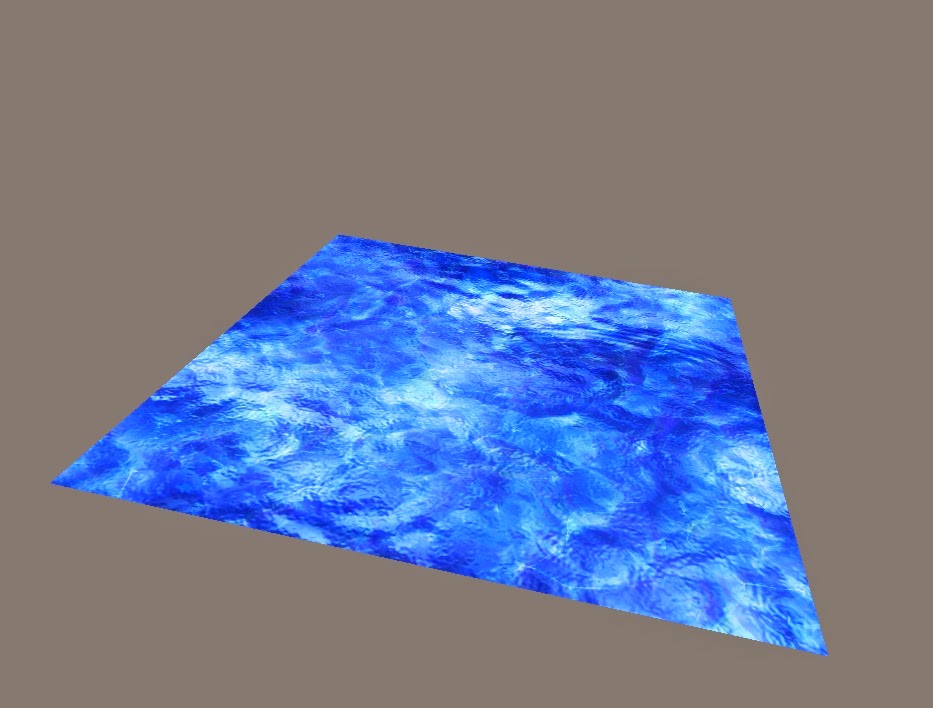

This past week was very busy, with many deadlines approaching quickly. I spent most of it working on the water effect for my homework, so this blog post is about that. I’ll start by showing off the final result:

I’m really happy with the way it turned out. Maybe next semester I’ll add it to our game, but I would have to change the style a bit, since this water looks too realistic for our game’s art style.

The entire process requires 3 rendering passes.

The first pass renders the reflection texture. To do that, a second camera is added. The reflection camera is placed at the regular camera’s position with its Y coordinate scaled by -1, and the point the camera is looking at is also scaled by -1 in Y. This mirrors the camera across the water surface, assuming the water sits at y = 0. All objects below the water are clipped from the scene, and the scene from this camera’s perspective is stored in a framebuffer object.
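Here’s a minimal sketch of the reflection camera setup in plain Python, just to show the math. It assumes a horizontal water plane at some height `water_height`; the `Vec3`, `mirror_across_water`, and `reflection_camera` names are my own for illustration, not from any engine. When the water is at y = 0, mirroring reduces to exactly the "scale Y by -1" step above. It only covers the eye and look-at points, since the post doesn’t say how the up vector is handled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float


def mirror_across_water(p: Vec3, water_height: float) -> Vec3:
    """Reflect a point across the horizontal plane y = water_height."""
    # A point d units above the plane maps to a point d units below it.
    return Vec3(p.x, 2.0 * water_height - p.y, p.z)


def reflection_camera(eye: Vec3, target: Vec3, water_height: float = 0.0):
    """Return the (eye, look-at target) pair for the reflection camera."""
    # Both the camera position and the point it looks at must be mirrored.
    return (mirror_across_water(eye, water_height),
            mirror_across_water(target, water_height))


eye, target = reflection_camera(Vec3(0.0, 5.0, -10.0), Vec3(0.0, 0.0, 0.0))
print(eye, target)
```

Using `2 * water_height - y` instead of a plain `-y` keeps the reflection correct if the water plane is ever raised or lowered.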

The second pass renders the refraction texture. It is similar to the reflection pass, except that it renders from the main camera’s perspective and clips all objects above the water. The result is stored in another framebuffer object.
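The clipping in both passes can be described with a single plane equation (a, b, c, d): a point is kept when a·x + b·y + c·z + d is non-negative. Below is a minimal sketch assuming a horizontal water plane; the helper names are hypothetical. In OpenGL (which I’m assuming here, since the post doesn’t name the API), this value would typically be written to `gl_ClipDistance[0]` in the vertex shader with `GL_CLIP_DISTANCE0` enabled, and the plane is flipped between the two passes.

```python
from typing import Tuple

Plane = Tuple[float, float, float, float]
Point = Tuple[float, float, float]


def clip_distance(plane: Plane, point: Point) -> float:
    """Signed plane distance; geometry where this is negative gets clipped."""
    a, b, c, d = plane
    x, y, z = point
    return a * x + b * y + c * z + d


def reflection_clip_plane(water_height: float) -> Plane:
    # Keeps geometry above the water (y > water_height).
    return (0.0, 1.0, 0.0, -water_height)


def refraction_clip_plane(water_height: float) -> Plane:
    # Keeps geometry below the water (y < water_height).
    return (0.0, -1.0, 0.0, water_height)


rock_under_water = (0.0, -2.0, 3.0)
tree_above_water = (4.0, 6.0, 1.0)
for name, plane in (("reflection", reflection_clip_plane(0.0)),
                    ("refraction", refraction_clip_plane(0.0))):
    kept = [p for p in (rock_under_water, tree_above_water)
            if clip_distance(plane, p) >= 0.0]
    print(name, kept)
```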

The final pass is when the water is actually rendered. The reflection and refraction textures are passed into the water fragment shader, along with a number of other uniforms, including two more textures (a normal map and a DuDv map) and the camera direction. The vertex shader does little beyond preparing variables for the fragment shader and displacing vertices based on the colours in the normal map. The fragment shader is where everything happens. The reflection and refraction maps are sampled for their colour, with the UVs distorted based on a constant distortion value set in the shader. The camera direction is used for the Fresnel effect: when you look straight down at the water it appears more transparent, and when you look across it at a shallow angle it appears more reflective.
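To make the fragment shader logic concrete, here’s a rough Python sketch of the per-pixel math. It’s an assumption-heavy illustration, not my actual shader: it assumes the DuDv texel is stored in the [0, 1] range, uses a made-up distortion strength of 0.02, treats the water normal as straight up, and uses a simple dot-product term in place of the full Fresnel equations or Schlick’s approximation. All function names are hypothetical.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def mix(a, b, t):
    # Same idea as GLSL mix(): a at t = 0, b at t = 1.
    return tuple(x * (1.0 - t) + y * t for x, y in zip(a, b))


def distort_uv(uv, dudv_sample, strength=0.02):
    # Remap the DuDv texel from [0, 1] to [-1, 1], then scale it down.
    du = (dudv_sample[0] * 2.0 - 1.0) * strength
    dv = (dudv_sample[1] * 2.0 - 1.0) * strength
    return (uv[0] + du, uv[1] + dv)


def refraction_weight(to_camera, normal=(0.0, 1.0, 0.0)):
    # 1.0 when looking straight down at the water, 0.0 at grazing angles.
    return max(dot(normalize(to_camera), normalize(normal)), 0.0)


def water_colour(reflection_rgb, refraction_rgb, to_camera):
    # More refraction (transparency) from above, more reflection side-on.
    return mix(reflection_rgb, refraction_rgb, refraction_weight(to_camera))


print(water_colour((1.0, 1.0, 1.0), (0.0, 0.2, 0.3), (0.0, 1.0, 0.0)))
print(water_colour((1.0, 1.0, 1.0), (0.0, 0.2, 0.3), (1.0, 0.0, 0.0)))
```

In a real shader the distorted UVs would be used to sample the reflection and refraction textures before the two colours are mixed.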

A few mistakes I made while working on this question were:

The reflection camera position. At first I was just turning the camera upside down instead of scaling its position by -1 in Y. With the orientation flipped, objects ended up on the wrong side. I also forgot to scale the look-at position, so reflections didn’t show up where they should have.

Rendering order. Sometimes when I ran the program, the objects in the reflection would end up behind the skybox or disappear when the skybox was drawn over them. I fixed this by making sure the objects were the last things rendered during the render-to-texture passes.
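The first mistake makes sense once you compare the two transforms. Turning a camera upside down is a 180° roll, which is a rotation, so it flips both screen axes; a reflection only flips the vertical one. Here’s a tiny sketch, treating both as operations on a point in camera space and assuming the camera looks along the z axis (the function names are just for illustration):

```python
def roll_180(p):
    """Rotate 180 degrees about the view (z) axis: what 'upside down' does."""
    x, y, z = p
    return (-x, -y, z)


def mirror_y(p):
    """Reflect across the horizontal plane: what a water reflection needs."""
    x, y, z = p
    return (x, -y, z)


point_right_of_camera = (1.0, 2.0, 5.0)
print(roll_180(point_right_of_camera))
print(mirror_y(point_right_of_camera))
```

Both versions put the point below the horizon, but only the roll also moves it from the right side to the left, which matches objects ending up on the wrong side.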

Overall, I am quite happy with the water effect I was able to create. Hopefully I can add it to our game sometime next semester.
